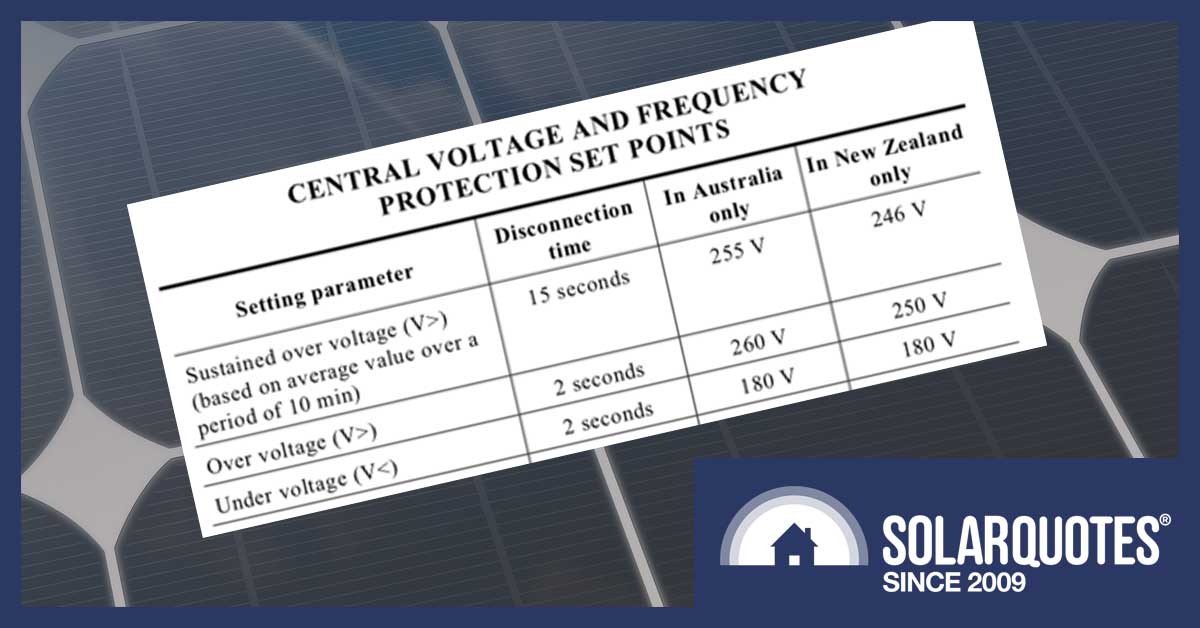

If your inverter sees a grid voltage that is too high for too long, Australian Standards mandate it disconnects from the grid. Before the voltage is so high it disconnects, your inverter may also reduce its power output in response to high grid voltages.

There’s a lot of fear-mongering about how the rise of renewables threatens our power grids, but a real problem getting real attention from the industry is how voltage rise on our mostly old and inflexible infrastructure stops customers from getting the most out of their solar PV installations.

Anyone who’s trained in this stuff already knows this of course, but I’d guess most consumers don’t realise the relationship between the voltage at the inverter and the voltage on the grid is very important. When things go wrong, the customer gets a bill showing far less electricity shipped to the grid than they expected, and someone – a solar installer, an electricity retailer, or a network – gets an angry phone call.

At a recent Clean Energy Council webinar, all four speakers – the CEC’s James Patterson, Solar Analytics’ Stefan Jarnason, SA Power Networks’ Travis Kausche, and SMA’s Piers Morton – agreed over-voltage problems are a big contributor to consumer complaints that they’re not getting value-for-money out of their grid-connected solar power systems.

The inverter has to be running at a higher voltage than the grid, so it can push power out (current flows from a point of higher voltage towards a point of lower voltage, never the other way around). The problem is every solar installation pushing power into the system lifts the network voltage just a little – and with tens of thousands of systems coming online on SA Power’s network each year, some systems are confronted with a grid with voltage outside inverter tolerance (the AS/NZS 4777.1 standard limits inverter voltage to 255V).

It’s worth noting solar power systems aren’t the only cause of overvoltage issues – as Solar Analytics founder Stefan Jarnason remarked, enough overvoltage issues occur at night-time to prove that.

SA Power Networks strategist Travis Kausche told the webinar the state currently has homes feeding 1 GW into the grid; 163 MW of that came online in 2018, and there’s up to 300 MW in the pipeline for 2019.

Kausche said the growth of solar energy “makes the dynamic range [the difference between the highest and lowest voltages seen on the network – Editor] much greater than if there was only load on the network”.

What happens when the inverter has to back off? The customer starts complaining, usually to their installer, that network feed-in tariff payments are falling short of their expectations.

Everybody can do the right thing, but the system doesn’t work right (Image: Clean Energy Council seminar)

That can be fixed, but it’s always better to try and avoid problems than to have to rescue the relationship with a cranky customer – and preventative measures, as well as remediation, were the focus of the webinar.

Scaling The Overvoltage Problem

Solar Analytics’ Stefan Jarnason said his company’s analysis of 30,000 customers showed 50 percent of feeders had overvoltage issues 50 times a year or more, when scanned for voltages exceeding 253V for more than 5 minutes. Undervoltage was rare by comparison: only 2 percent of customers experienced 50 or more undervoltage events a year.
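As a rough illustration of the kind of scan Jarnason described, the sketch below flags runs where logged voltage stays above 253V for more than five minutes. It is not Solar Analytics’ method; the function name, the data layout (a time-sorted list of timestamp and voltage pairs) and the sample readings are all assumptions made for the example.

```python
from datetime import datetime, timedelta

def find_overvoltage_events(samples, threshold_v=253.0,
                            min_duration=timedelta(minutes=5)):
    """Return (start, end) pairs for runs above threshold_v.

    samples: list of (timestamp, volts) tuples sorted by time (assumed layout).
    A run counts only if the time from its first to its last
    above-threshold sample is longer than min_duration.
    """
    events = []
    run_start = None
    last_high = None
    for ts, volts in samples:
        if volts > threshold_v:
            if run_start is None:
                run_start = ts
            last_high = ts
        else:
            if run_start is not None and last_high - run_start > min_duration:
                events.append((run_start, last_high))
            run_start = None
    # Close a run that is still open at the end of the log.
    if run_start is not None and last_high - run_start > min_duration:
        events.append((run_start, last_high))
    return events

# Invented one-minute readings: a seven-sample excursion, then a brief blip.
t0 = datetime(2019, 1, 1, 12, 0)
readings = [250, 254, 254, 255, 256, 254, 254, 254, 250, 254]
samples = [(t0 + timedelta(minutes=i), v) for i, v in enumerate(readings)]
events = find_overvoltage_events(samples)
print(len(events), "event(s) found")
```

With coarser logging the short blip at the end could be missed or merged into a longer run, which is one reason finer-grained data makes the customer impact easier to see.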

The more granular the data-logging, Jarnason showed, the easier to see the impact on the customer. Solar Analytics’ capture at five-second resolution clearly shows an inverter shutting down because its voltage is too high, trying to reconnect, shutting down again, and so on.

The orange line shows a system shutting down because of voltage rise on the grid, then trying to restart (Image: Clean Energy Council seminar)

Fixing The Problem

The challenge for networks is some of the available fixes are hard to implement on old infrastructure.

Imagine a long feeder serving many customers, meaning that to maintain voltage in-tolerance for the last customer, the voltage closest to the transformer will leave the inverter very little headroom.
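A toy model can make the headroom problem concrete. The sketch below treats a feeder as a chain of identical resistive segments with one customer at each node. All figures (250V at the transformer, 0.05 ohms per segment, 10A per customer) are invented for illustration, and real feeders have reactance, multiple phases and uneven loads that this ignores.

```python
def feeder_voltages(v_transformer, segment_ohms, customer_amps):
    """Voltage at each customer along a simple radial feeder.

    customer_amps: net current per customer, ordered from the transformer.
    Positive means the customer is drawing load, negative means exporting.
    """
    voltages = []
    v = v_transformer
    for k in range(len(customer_amps)):
        # Each segment carries the net current of every customer beyond it.
        downstream_amps = sum(customer_amps[k:])
        v -= segment_ohms * downstream_amps
        voltages.append(v)
    return voltages

# Hypothetical evening (everyone drawing 10 A) and midday (everyone exporting 10 A).
evening = feeder_voltages(250.0, 0.05, [10.0] * 5)
midday = feeder_voltages(250.0, 0.05, [-10.0] * 5)
print("evening:", [round(v, 1) for v in evening])
print("midday: ", [round(v, 1) for v in midday])
```

In this made-up case the last customer sits at about 242.5V under evening load and about 257.5V under midday export, which is the widened range Kausche describes: a transformer setting chosen to hold up the end of the line in the evening leaves little room for export at midday.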

As Kausche explained, the network provider can only adjust the voltage by changing the tap on the transformer if the transformer is new enough and has lower voltage taps available – but the majority of SA Power Networks’ 70,000 low voltage transformers don’t have that capacity, and transformer upgrades are expensive. Substation transformers are much more capable, but don’t offer granular voltage management.

“We have these legacy old assets that can’t really go down,” Kausche said.

The webinar highlighted the role solar installers can play in remediation, with all three speakers saying that getting inverter installation and configuration right plays a big part in avoiding complaints.

SA Power Networks has changed its inverter rules to require all inverters installed on its network to have the Volt-VAR setting enabled (listed as optional in the AS/NZS 4777.1 standard), so the inverter is more responsive to network conditions.

The network is also working with other providers to produce a standard set of solar inverter settings, so at some point OEMs and solar installers have a standard configuration profile Australia-wide.

SA Power Networks is also trying to move more customer loads to the middle of the day. If an off-peak hot water system, for example, can be remotely configured, adjustment is easy, and from 2020 there will be a “sponge” tariff to encourage consumers to schedule loads such as washers, dishwashers and pool pumps towards the middle of the day.

What Solar Installers Can Do

The CEC’s Patterson pointed out system design, including cable gauge, is also important to manage voltage rise. The CEC surveyed participants to see if they liked the idea of an online calculator tool to help installers design their solar power systems – and received a 100 percent “Yes” vote; so we can probably expect to see that offered fairly soon.
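To show why cable gauge matters, here is a minimal sketch of a voltage rise estimate for a single-phase run. It is not the CEC’s calculator and not a substitute for the relevant wiring standards; the copper resistivity is a round textbook figure, the function and parameter names are made up, and the 5kW system, 30m run and cable sizes are example values only.

```python
# Approximate resistivity of copper near room temperature, in ohm mm^2 per metre
# (a commonly quoted round figure, used here as an assumption).
COPPER_RESISTIVITY = 0.0175

def voltage_rise(power_w, grid_v, run_m, area_mm2,
                 resistivity=COPPER_RESISTIVITY):
    """Estimate voltage rise across a two-conductor cable run while exporting.

    Treats the cable as purely resistive and the export as unity power factor.
    """
    current_a = power_w / grid_v
    # Current flows out on one conductor and back on the other.
    loop_ohms = resistivity * (2 * run_m) / area_mm2
    return current_a * loop_ohms

rise_thin = voltage_rise(5000.0, 240.0, 30.0, 4.0)
rise_thick = voltage_rise(5000.0, 240.0, 30.0, 16.0)
print(f"4 mm2 cable:  {rise_thin:.2f} V rise")
print(f"16 mm2 cable: {rise_thick:.2f} V rise")
```

Under these assumptions, quadrupling the conductor area cuts the rise to a quarter, which can be the difference between an inverter that stays under its limit and one that trips on a grid already running high.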

SMA’s Piers Morton said the impact of voltage rise emphasised the need for remotely-manageable solar inverters, something SMA will be introducing in the near future, and said installers can also help by paying more attention to balancing systems across different phases.

If you are a solar power system owner with a voltage rise problem, Finn has written a handy troubleshooting guide here.


I had constant disconnects in summer when my system was installed. You could literally see the voltage rising fast as cloud cover went into a break, then bang, disconnect. Only to reconnect maybe a minute later.

The supplier remotely upped the disconnect voltage a bit above 253v and no issues since.

Same thing happened here to me in Mexico. My installer tweaked the disconnect voltage and no problems since. The learning curve is pretty steep with solar for sure.

Thanks mike.

My street has more than 25% solar.

I also tried calling the power company. They sent a guy out in the evening and said all is in spec!

At that point it was easier to get my supplier to fix it. Never had a problem since. More than a year now.

I work in the garage where the inverter is mounted so I was very aware of it dropping out. Must be many solar owners who will never know.

253.?

235 is the target

Approved imported appliances are all new international standard .100 to 240 volt no plus or minus required according to the SaaS electrical standards mob

At 253 V any warranty will be denied due to heat damage caused by high voltage. Anything above 245 V appears to overheat these appliances and dramatically shorten their life span. It’s amazing the morons in charge have allowed this to happen.

It’s a serious safety risk. What they should have done was lower the local grid voltage by installing appropriate transformer infrastructure able to cope with solar feedback.

The higher the voltage the less you can send back and your inverter will not like the constant high voltage either .

Bastards really are peanuts .

As a result your solar output will drop , they are likely limiting you as well and choking your feedback .

And increasing your bill because your appliances can do nothing with the extra voltage but shunt it around as heat dramatically shortening life span ….

They did you no favours at all. In fact skrwd you a bit harder …….

I got the bastards to put up new connection line to pole as the old one was thin crap causing higher voltage rise.

I got down to 251 max but all day long .

Still a long way off 235, our alleged grid voltage, and far beyond new appliances’ capabilities.

Most new appliances have a small wire which burns out under high-voltage heat, which usually prevents house fires. But if the wire doesn’t burn out for some reason and something on the board ignites, you will have a fire caused directly by high voltage, not by a failed device. The safety device might have failed, but that would not have been an issue if the voltage was 235 V…..

Liability disaster just most sparky will not go up against a corporate and you are left arguing app by yourself against billion dollar corporation who knows they can get away with murder , should anyone’s house incinerate them …imo

We need action .

240 hard cap . Distributers . If they can’t ,renationalise .

Hi Dean,

Here in the UK our voltage standards appear to be the same as Australia’s. I had the same problems with my grid supply. When we moved here, the RAF base had shut down. Years later they reopened it, and then our grid problems started: most mornings around 7:00 the breakers would drop out, and 3 to 5 minutes after reconnecting them they would drop out again. This continued for about 1 to 1 1/2 hours, and some evenings the same thing happened. My voltmeter showed 263V at cut-out. Recording voltages over a week showed the mean grid voltage at 245V. This has two consequences. First, the safety margin for overvoltage spikes is reduced by 15V, leaving 8V for spikes. Second, as electricity is priced in kWh, it means that you are paying over the odds for electricity: since kW = V x Amps, increasing the volts increases the kW, to no advantage to the consumer and more money to the power companies.

It’s worthwhile now to repost my earlier post on this subject from Aug 2020:-

The standard range of power quality issues have been touched on.

(Voltage Transient – Sag – Swell – Under Voltage – Interruption – Steady State Distortion – Flicker and Noise).

All inconvenient to some degree for sure, but the elephant in the room not yet mentioned, but one which will cost On-Grid customers cash, and likely to come into force anytime soon, will be a Grid Power Factor penalty rate (PFR).

Advanced-functionality grid-tie inverters will need to respond to this requirement in their design, functioning in a way that maximises savings across all areas, including managing active and reactive power and thus power factor. The further the PF is from 1, the greater the reactive power penalty rate will be.

The grid authority will also have the power to direct inverters to inject reactive power to bring power factor into line. Already a common practice elsewhere.

So there it is: power factor is king in any generation system servicing loads. Every power equation in AC theory includes the value V (voltage), including power factor PF. As we already know, for a given active power and voltage the current I is inversely proportional to the PF, so if PF is low (less than 1) the current will increase: I is proportional to 1 divided by PF. This results in increased I squared R line losses and lower efficiency of the system, i.e. higher electricity bills for lower active power usage.
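The relationship between power factor, current and line loss can be put into numbers with a short sketch. The function names and the figures (5kW delivered at 240V over a line with 0.2 ohms of resistance) are assumptions chosen only to illustrate the proportionality described above.

```python
def line_current(active_power_w, voltage_v, power_factor):
    """RMS line current needed to deliver the given active power."""
    return active_power_w / (voltage_v * power_factor)

def line_loss_w(active_power_w, voltage_v, power_factor, line_ohms):
    """I squared R loss in the line for that current."""
    amps = line_current(active_power_w, voltage_v, power_factor)
    return amps ** 2 * line_ohms

# Same active power delivered at unity and at 0.8 power factor.
for pf in (1.0, 0.8):
    amps = line_current(5000.0, 240.0, pf)
    loss = line_loss_w(5000.0, 240.0, pf, 0.2)
    print(f"PF {pf}: {amps:.1f} A, {loss:.1f} W lost in the line")
```

Because loss scales with the square of the current, dropping from a power factor of 1 to 0.8 raises the current by a quarter but the line loss by a little over half in this example.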

Learn about Power Factor. It is the governing electrical concept for all Generation Systems including Roof top Solar inverter Systems. It alone can bring the Solar PV roof top industry to a standstill.

I was having these discussions with Ergon and Energex over 10 years ago representing several Chinese Inverter manufacturers.

PS. Greater conductor sizing to transmit the same quantity of power is not a sustainable solution.

Lawrence Coomber

….sort of ‘Stone Soup’ in reverse.

I asked long ago whether there were any whiz-kids out there that could modify a gee-whiz grid-connect inverter so that it would run a stand-alone operation?

Acquired smattering of what would be involved, but found nobody who’d try it on. (The suggestion that a battery connected inverter hooked into the ‘grid-input’ might work. ) Is that workable?

“The inverter has to be running at a higher voltage than the grid, so it can push power out (current flows from a point of higher voltage towards a point of lower voltage, never the other way around). ”

Well if we had a DC grid the above would be true. But for AC a tad more complicated. Simply increasing voltage could in fact do nothing to increase power flow. What we need to do is in fact try and increase frequency at the inverter. But eventually when we get the power out of the inverter first principles will see a voltage rise

No. It is not any more complicated. Just as in DC, AC will also only flow from high voltage to low voltage…full stop, end of story. The size of the voltage difference and the impedance of the link directly dictate the amount of power that flows (or, probably more correctly, the amount of power that the inverter wants to put out and the impedance of the link determine the voltage that the inverter needs to apply to export that much power). And this is why voltage “rise” is the problem, because it is often this rise that triggers the voltage problem in the inverter, even when in some cases the voltage at the point of connection to the grid might be in spec, and why installers should be doing voltage rise calculations and keeping that in spec by increasing the size of the wires to the house when needed. Frequency does not come into the equations anywhere.

I think you are adding 1 and 1 together and coming up with 11 when you bring up frequency. Yes, frequency is important in the management of the grid. Usually when there is an oversupply of power into the network, the frequency will tend to rise, and when there is a lack of supply, it will tend to fall. And this mechanism is used in inverters to throttle supply when things get out of range. This “inertia” from spinning power generators has helped keep things in balance. But it has nothing to do with the flow of power. Our grid-tied inverters (and all generators on the grid) in fact have to match the frequency of the grid as it fluctuates.

Not quite. Changing the voltage on an AC generating device changes the reactive power output. And that can be either to the generating device or from it. It is the method by which reactive power is controlled from all generators, including inverters. And for those states where power is required to be delivered at 0.8 PF lagging (from memory), it is done by varying the voltage. This makes the VD problem a lot worse, as any change from unity PF increases current flow and hence causes more VD.

Real power flows are increased by ‘trying’ to increase frequency. On a conventional generator this is from opening control valves. On a grid tie inverter it is a pile of electronics.
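For readers who want the standard equations behind this exchange, a common textbook approximation relates the voltage difference across a link to both real and reactive power. The symbols below are not from the thread: $V_s$ is the sending-end (inverter or generator) voltage, $V_r$ the receiving-end (grid) voltage, $R + jX$ the series impedance of the link, $P$ and $Q$ the real and reactive power delivered to the receiving end, and $\delta$ the phase angle by which the sending end leads. The approximation assumes a small $\delta$ and a modest voltage difference.

$$
V_s - V_r \approx \frac{R\,P + X\,Q}{V_r},
\qquad
\delta \approx \frac{X\,P - R\,Q}{V_s V_r}
$$

On a mostly resistive link such as a household service cable ($R \gg X$), the first expression reduces to roughly $R P / V_r$, so exporting real power shows up as voltage rise. On a mostly inductive link such as a high-voltage transmission line ($X \gg R$), real power is tied mainly to the angle $\delta$ (the familiar $P \approx V_s V_r \sin\delta / X$) and voltage magnitude mainly to reactive power. That difference in the $R/X$ ratio may be why the two positions in this thread seem to talk past each other.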

Sorry, to me that looks like adding 1 and 1 and 1 and 1 together to come up with 1111, and in my mind it further confuses what is a comparatively simple issue with half-truths. While clearly you have some understanding behind some things, to me it seems like a case of misinterpreting what you have learned. To me your logic seems to be flawed, probably too much to go into here. BUT you speak with great authority, and if this is backed by real knowledge and experience, I am always keen to be educated by those that might know something that I don’t, rather than risk continuing to live in a world of ignorance on the off chance I am wrong. And maybe the accepted laws of physics have changed and you can educate me about some new development that has changed everything. After all, my Electrical Engineering degree was 30 years ago, and in truth I have not worked in building generators or inverters since, and I don’t pretend to be an expert in grid transmission or generation, so it is not my area of expertise. But I do have a reasonable understanding of things and, probably more importantly, of the base principles that tend to drive this. But maybe there have been fundamental changes which make a large part of the fundamental principles wrong (possible but unlikely)…..or more likely I was too drunk and did not go to that lecture, so I am happy to be corrected if that is the case.

But the reality is that the laws of physics, and the flow of electricity and power, do not pander to a bunch of self-appointed experts on the internet speculating about them (including myself in that). They are governed by tried and tested mathematical principles. So if what you say is true, can you point to any documentation or equations that support what you are saying, so I can educate myself?

The whole “AC is more complicated than DC” idea is really half true. In reality, if you look at any period short enough, it is exactly the same, and we probably don’t need to confuse anyone short of an electrical engineer with the complexities. The only difference that does make it a bit more difficult is that capacitance and inductance introduce some factors that we need to take into account, and this means that voltage and current are always changing and not necessarily in sync with each other as they would be in a static DC circuit (or more correctly, they are in sync with each other, but can be phase shifted so current lags or leads voltage, which can affect PF). But they don’t fundamentally change the simple principle that power ALWAYS flows from high voltage to low voltage, and as long as 2 AC sources are in voltage sync as they are required to be in the grid, power will always flow from the high to the low voltage source. Full stop, end of story. No one needs to read on from here, unless you are not convinced of this VERY simple principle.

To support what I am saying I only have to point to Ohm’s law and the power equations, which exactly predict voltage rise and fall on any connection. Most people will know Ohm’s law as I = V/R, i.e. assuming constant resistance, we can see that current is directly proportional to voltage drop/rise. Sure, AC DOES make things a little more complicated deep under the covers because of constantly changing and not always in-phase values for I and V, and also because any transmission line will usually have some element of capacitance and inductance, so we need to adjust Ohm’s law to the one that is applicable for AC circuits. This is the almost identical I = V/Z, where Z is impedance measured in, you guessed it, ohms!! So again, we can see a direct relationship of current to voltage for any particular impedance that can be used to calculate the voltage rise or fall. Notice there is no frequency in this!!

But wait, I hear you say, because you know enough stuff to be dangerous: impedance IS affected by frequency if there is any element of inductance or capacitance in the circuit. This is because you know that 2 of the 3 components that make up impedance come from the capacitance and inductance of the circuit. And both inductive and capacitive reactance are directly affected by frequency, as any 1st year student knows (inductive reactance is proportional to frequency and capacitive reactance is inversely proportional). Added to that, practically all AC transmission lines will have elements of both inductance and capacitance by their very nature. But this effect for a transmission line is small because the impedance is made up of the inductance, capacitance and resistance of the wire, and the resistance of the wire is, I suspect, the most significant factor and is not affected by frequency. In addition, even if we incorrectly assumed all the impedance was due to inductance and capacitance, in the grid the frequency changes are relatively small proportionately, which will also limit their impact.

Where I live, over the last 24 hours the fluctuation has been less than 0.4% (despite my generation swinging between 0 and 14kW). The NEM target is, I think, 6%, and things would be going pretty wrong when these events happen, and they should be very rare. So you can see that, in the extreme, the difference this might make to I = V/Z is bugger all in the normal operation of the grid and can, for all intents and purposes, be ignored. Its impact is a very small % of a very small %, making it pretty insignificant.

Now we also have the power equation P = IV. Again, notice, no frequency in this calculation. So if we assume frequency plays a trivial role in practical power transmission impedance as outlined, and if we put these 2 equations together, the ONLY way we can increase output from a power station is to raise the voltage. From Ohm’s law, we can see that if we do that, we will output a greater current. And we can see from the power laws that those things equate to more power. Notice, while it is hard to say frequency plays no part in this, for the purposes of this discussion it is a small enough part to be ignored, and probably not worth raising and potentially distracting people from the real drivers.
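The size of the frequency effect on impedance described above can be checked with a few lines. This sketch assumes a simple series resistance and inductance (capacitance is ignored) with invented values of 0.3 ohms and 0.3 mH, and compares the impedance magnitude at 50Hz with that at a frequency 0.4% higher.

```python
import math

def impedance_magnitude(r_ohms, l_henries, freq_hz):
    """|Z| of a series resistance and inductance at the given frequency."""
    reactance = 2 * math.pi * freq_hz * l_henries
    return abs(complex(r_ohms, reactance))

R_OHMS = 0.3        # assumed link resistance
L_HENRIES = 0.3e-3  # assumed link inductance

z_nominal = impedance_magnitude(R_OHMS, L_HENRIES, 50.0)
z_shifted = impedance_magnitude(R_OHMS, L_HENRIES, 50.0 * 1.004)
relative_change = (z_shifted - z_nominal) / z_nominal
print(f"|Z| changes by {relative_change:.4%} for a 0.4% frequency shift")
```

For these assumed values the change in impedance magnitude is well under a tenth of a percent. The check only covers how frequency alters the magnitude of Z on a mostly resistive link; it says nothing about phase angle between the two ends.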

The whole reactive power / power factor issue is largely irrelevant. Reactive power is really just a side effect of voltage and current being out of sync, and this does affect the P=IV equation, as you can see different values of VA and W if PF is not 1. But this is a side effect of the inductive load that is typical of a spinning generator (and other generators and loads), and NOT a requirement. In fact, if you want, you can correct power factor to 1 with additional capacitors and inductors. This does not impact the generator’s ability to output power. But for the purpose of this practical discussion I can’t see any reason it would allow power to flow from low voltage to high voltage, which seemed to be the thing you were suggesting could happen and the reason I commented in the beginning.

As for broad statements that “Changing the voltage on an AC generating device changes the reactive power output”, I would love to understand what you are really saying here, and, if it is what I think, where you get that idea from and what relevance it has. Sure, all sorts of generators and loads might have a power factor of less than one because of their own unique capacitive / inductive characteristics, but in truth this can all be corrected if required. And in fact, I could be wrong, but I believe your typical grid-tied inverter can also output with a PF of effectively 1, or something else if that is what the distributor demands for their own reasons. As a case in point, my grid-tied inverter is putting out power with a PF of 1, or close enough to it to not matter. I know this not only because that is what the grid profile tells me, but, now I think of it, because I have also measured it (I get the same output from a monitor that does not correct for PF and one that does, which shows it is 1). If what you are saying is true, I could never export any power, which is not the case.

I think you are getting confused with inertia, and have probably heard of its traditional importance in stabilising grid frequencies, i.e. when there is a sudden drop in supply this inertia can supply the grid for a brief period of time (accompanied by a frequency drop), or if there is a drop in demand, the spinning generators can absorb the excess and turn it into inertia (and a side effect of this will be a small increase in frequency). But AEMO and the generators are working hard behind the scenes to prevent this ever happening, and in a perfect world the grid frequency would always be 50Hz at all output levels and at all times. But this does NOT mean that frequency is the mechanism by which real power is pushed to the grid. Surely if frequency was required to push power to the grid, we would see high frequency in the middle of the day when power stations are outputting at maximum load, and low frequencies in the middle of the night at off-peak times when there is less generation on the grid. But this does not happen!!

I think you need to consider “grid inertia” like a manual car travelling down the highway with the cruise control set at 100km/h. Think of the car as the power station, the engine RPM as the frequency of the grid, the hills as the change in the load on the grid, and the power that the engine puts out as the equivalent of the power that the power station needs to produce. Just like the grid with its spinning generators, the car has inertia from the mass of the car moving at 100km/h and the inertia of the spinning engine. In this scenario, to stick to 100km/h, the engine RPM will be a particular value (let’s say 3000rpm for argument’s sake, and notice I have said manual car because, unlike automatics, most people would be aware there is a fixed relationship between RPM and the speed of the car assuming the same gearing).

When we hit a hill, to maintain the 100km/h (50Hz), the cruise control will need to inject more fuel so the engine generates more power (the same as power output from the power station in this analogy) to maintain the 100km/h / 3000rpm. We are clearly producing more power, but note there is NO increase in RPM. If the engine is powerful enough and the cruise control reacts fast enough, and with the added benefit of the inertia, you will stay at exactly 100km/h / 3000rpm. The opposite happens when you go down the hill: still 100km/h, but less power is needed, so less fuel goes to the engine and less power is produced by the engine, but it is still 100km/h and still 3000rpm. In fact you will be able to travel along on your merry way at exactly 100km/h and 3000rpm despite the fact that you might be varying power all the way from zero (coasting down a hill) to 100% for a hill where you have just a powerful enough engine to maintain 100km/h / 3000rpm. That will happen indefinitely until 1 of 2 things happens.

1 is a big long steep uphill on which your engine is not powerful enough to maintain 100km/h / 3000rpm (think, in the grid, of demand increasing above the capacity of the generator); the other is the same downhill, which even with zero power from the engine causes you to accelerate past 100km/h / 3000rpm. Let’s focus on uphill. Now if the hill is steep enough to slow you down, but only short, your inertia carries you over that hill without you even noticing any significant drop. But this inertia is effectively a conversion of some stored energy into useful energy, and you do lose some of that stored energy / inertia, which you will need to provide power to regain (it shows up as a small reduction in RPM). But if the hill is longer, pretty soon your speed and RPM will drop because you just don’t have enough power, and if this is not addressed and the hill goes on, the car will slow until it stalls because you have run out of power. (This assumes you don’t change down a gear, which is the answer in the car because you would prefer to get up the hill slowly than not at all. But this is not an option that helps the grid in a power station, because while changing down a gear might increase torque, it does not increase power, so in your power station it might help you maintain frequency, but not power output, which is the important thing for a power station in times of limited supply.)

The same happens in the grid: the frequency drops. But it is not the drop in frequency that is the real problem. The problem is that the generator is now not spinning as fast, and that means less power and a struggle to maintain voltage high enough for the demanded power. As per the equations above, lower voltage becomes lower power, and suddenly, unless you can rapidly introduce more generation or reduce loads, the whole system collapses, and this is what causes the blackouts (planned to protect the system, or unplanned that take everything out).

Now I hear you say that this is evidence to prove your point, because the car engine is trying to rev at higher RPM and this is what is producing the power. But that is putting the cart before the horse. And as can be seen from above, in most cases the power output can be varied from 0 to 100% without changing the RPM/Hz. You are pointing to a side effect that does not actually happen most of the time, and making it the cause. Reality is much simpler in a power station. They are built and are required to maintain frequency, not increase or decrease it. They push power out by raising the voltage. On our end, it is exactly the same and has practically nothing to do with frequency.

Additionally, if it was frequency that was needed to push power through the grid, and not voltage, surely we would see the frequency drop as we move through the grid from the generators. This does not happen, it is voltage drop we see, with the frequency largely the same.

Finally, your original comment that I felt needed correcting was “Simply increasing voltage could in fact do nothing to increase power flow”. I guess I would ask if you could give me 1 real-life example in the grid where this could be practically true. The example needs to be 1 where power can flow from low voltage to high voltage in a real-life grid where everything is in sync.

Now maybe I am missing something fundamental, and if so my apologies and I would really value being educated. If so, I suggest you point me at the reference or calculations that support your claims.

Too much electro-wank here!

This is all way beyond the capacity and interest of any but the most dedicated solarist.

Tone it down, please. This discussion should be taken offline where you electro-donks can indulge to your hearts’ content.

Richard, I’ve not actually read Matthew’s post so can’t comment on it. If it’s too complicated for you then maybe you are in the wrong place. There are many here that have views, and the conversation may get somewhat technical as many of us are engineers of various degrees and want to have some input by putting our views forward.

Matthew, I think you’re confusing current flow with power flow. In DC systems, both are always in the same direction, but this is not true for AC systems. You can have the current flowing in one direction and the power flowing in the other direction.

In a standard 50 Hz grid with a source and a load, the current flow direction changes 50 times per second, the power flow, however, is always in the same direction. That’s why I tend to agree with Peter, but I would like him to develop his line of thought.

Jeez Esdras – I think you might be trying to teach Matthew to “suck eggs”. I will agree that Matthew’s “missives” are so long that by the time I get to the end, I may have forgotten the beginning – but I do believe he understands the principles of AC theory.

Please realise that the voltage also changes polarity 50 times a second.

So, for a purely resistive load both the current AND the voltage change direction at 50 Hz, so the power is always positive.

However, what you have said is not always true. If the load is other than purely resistive, at some points in the cycle the current and voltage will have opposing polarities, and the power flow will be negative (the load will be supplying power back to the source). The amount will depend on the “power factor”.

This is not rocket science – the lower the power factor, the greater the current flow, for a given nett power delivered.

This is why DNSPs want PF close to 1, as otherwise they incur the additional I²R losses in distribution, which they cannot charge you for.
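The point about instantaneous power going negative can be seen numerically. The sketch below samples p(t) = v(t) × i(t) over one cycle for a purely resistive load and for a current lagging the voltage by 60 degrees; the 240V and 10A RMS figures and the function name are assumptions for illustration.

```python
import math

def instantaneous_power(v_rms, i_rms, phase_deg, steps=1000):
    """Samples of p(t) = v(t) * i(t) over one cycle, current lagging by phase_deg."""
    phi = math.radians(phase_deg)
    samples = []
    for n in range(steps):
        angle = 2 * math.pi * n / steps
        v = math.sqrt(2) * v_rms * math.sin(angle)
        i = math.sqrt(2) * i_rms * math.sin(angle - phi)
        samples.append(v * i)
    return samples

resistive = instantaneous_power(240.0, 10.0, 0.0)
lagging = instantaneous_power(240.0, 10.0, 60.0)

mean_resistive = sum(resistive) / len(resistive)
mean_lagging = sum(lagging) / len(lagging)
print(f"resistive: mean {mean_resistive:.0f} W, minimum {min(resistive):.0f} W")
print(f"lagging:   mean {mean_lagging:.0f} W, minimum {min(lagging):.0f} W")
```

With the same RMS voltage and current, the lagging case delivers half the average power (the power factor is cos 60° = 0.5) and spends part of each cycle with power flowing back towards the source.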

I think the CEC graphic is missing a 2 in front of the “= 58v”, which should be “= 258v”, unless I am missing something.

Well spotted. Fixed. Thank you.

Richard,

SolarEdge inverters can be voltage limited instead of power limited. My system automatically winds back to control the voltage, so I actually help my neighbors too. My best export was 85kWh/day last Christmas. (14kW panels, 2x 5kW SolarEdge inverters. Replacement legacy system.)

On my system, the calculations said 8.4kW, but by using Voltage limit, I can sometimes approach 9kW (depending on what my neighbors are exporting).

regards,

Doug,

I think when you say “voltage limited” you are referring to “volt-watt mode” which is an optional mode in AS4777.2:2015 that pretty much all the mainstream inverter manufacturers implement. You can read about it here :-

https://www.gses.com.au/wp-content/uploads/2016/09/GC_AU8-2_4777-2016-updates.pdf

Effectively, if enabled, instead of the inverter doing a hard shutdown when various voltages limits are reached, this mode allows for slightly higher voltage limits. But the trade off is that you will ramp down output, and in fact the ramping down of power starts at a lower voltage.

Overall, this is better for stability of the grid, as it enables a mechanism where output is reduced when there is oversupply, and also avoids some big swings in power if big groups of inverters were all going from full power to shutdown at the same time. But for us end users there are some upsides and downsides. The upside is that it typically raises the bar for the voltage at which the inverter actually fully shuts down. This is further helped by the fact that when you ramp down, you reduce the current and this also reduces the voltage rise that you are creating by generating power and trying to push it out, so there is effectively a double benefit here if you only cared about preventing inverter shutdown. So for anyone who implements this, there is likely to be a significant improvement in the number of shutdowns if their voltages are fluctuating around the limits.

But the downside is that the voltage limits at which you must ramp down are lower than the trip voltages. So while you might trip off substantially less, you are going to be spending more time throttling your output, and that throttling will be starting at a lower voltage. This means you will be spending time throttling your power where, under the standard mode, you would have been able to output 100%. For some people implementing this, it will actually reduce their overall output if their typical voltages are above the ramping voltages but below the cutoff voltages. For some people it will increase their output, because it is better to be outputting 20% than 0%. But there is no doubt that for everyone, it will significantly reduce the number of cutoff events.

There is no doubt this is good for the stability of the grid, so I don’t want to suggest there is any conspiracy here. But there is some truth to the fact that there will be a lot of distributors and solar installers who will love this mode, because it is effectively enabling them to sweep the overarching problems of over-voltage in the network under the carpet and reduce the pressure to fix what might be an expensive problem. Most people, if they don’t notice the inverter shutting off in the middle of the day, are not likely to be aware that they might be having significant amounts of power throttled. Even people with a good amount of awareness of what their panels should be producing can find it hard to distinguish this throttling from other factors that reduce output (ie cloudy day, heat, dirty panels, the fact that panels never produce rated capacity etc etc) until the problem is considerable, which again is probably sweeping the problem under the carpet.

It is worth pointing out, this change is nothing to do with “power limiting”, and both modes of operation are still voltage limiting. They only happen when high voltage is an issue, which should be very rare if the distributors provided voltage to the required spec. They are just different forms of voltage limiting with different pros and cons. As for “power limiting”, all inverters and modes are in fact power limited if you have enough panels and solar generation to exceed the rated output of your inverter. If you have 5kW of panels and 5kW of inverter, you will never hit this limit. If you have 7kW of panels and a 5kW inverter, and the panels all face the same way and are optimally orientated, there might be times in the middle of the day where you are hitting this limit. But this is nothing to do with any additional throttling due to voltage rise.
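The trade-off can be sketched in a few lines of Python. This is only an illustration under assumed settings: a volt-watt ramp from 100% at 250 V down to 20% at 265 V, compared against a plain trip at 255 V. The real set points depend on the standard, your distributor and how the installer configured the inverter, and the function names here are hypothetical.

```python
def volt_watt_fraction(voltage, ramp_start=250.0, ramp_end=265.0, floor=0.2):
    """Fraction of rated output allowed under the assumed volt-watt ramp."""
    if voltage <= ramp_start:
        return 1.0
    if voltage > ramp_end:
        return 0.0  # past the end of the ramp the inverter has to disconnect
    span = (voltage - ramp_start) / (ramp_end - ramp_start)
    return 1.0 - (1.0 - floor) * span


def hard_trip_fraction(voltage, trip=255.0):
    """Fraction of rated output with no ramp: full power until the trip voltage."""
    return 1.0 if voltage <= trip else 0.0


# Compare the two modes across a range of voltages seen at the inverter.
for v in (248, 253, 258, 263, 266):
    print(v, round(volt_watt_fraction(v), 2), hard_trip_fraction(v))
```

With these assumed numbers, someone sitting at 253 V loses output under volt-watt mode (84% instead of 100%), while someone sitting at 258 V gains (about 57% instead of nothing).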

Now the mode is there for real grid stability reasons, and for extreme events (ie a storm brings down major power lines and isolates a city, and there is a spike in voltage due to excess supply of power until all the generators can ramp down). And it is totally reasonable that solar owners, like all generators, help rather than hinder the stability of the grid. BUT these “over voltage” conditions should be rare and short (at least until we have much higher levels of RE penetration than we do) and should probably only happen at times when watching the news would tell you about the conditions causing them. Otherwise your distributor should be providing power within spec, which should be (taken directly from Ausgrid’s documentation) :-

Ausgrid’s steady state LV supply voltage is within 216V to 253V at connection points under normal conditions.

If the distributors supplied to these standards, and solar was installed to spec, inverters tripping would be very rare indeed, and we would not be having this discussion. So let’s keep the pressure on to fix the underlying issue.

Electrician, with Essential Energy’s permission, upped mine to 282 V. Essential then ran loggers for a week, did something to the grid, and no more problems. I asked the Essential tech whether I needed to get the inverter lowered back to 255 V. He said no, no need. From upwards of 30 or so disconnects to zero.

Hi Peter

Really? I’d think you’d have a job on your hands to increase the inverter frequency against the massive inertia of the grid.

Thinking of a pole transformer, I’d think power would flow either one way, or the other, depending on the voltage ratio and the voltages on each side. A sufficiently increased voltage on one side would cause power to flow towards the other side. As far as I know, pole transformers do not (cannot) change frequency.

Ignoring power factor for the moment, P=VI in an ac circuit as well – so increasing voltage WILL increase power. Also I = V/Z, where Z is the impedance – so I will go up as well. The power factor deals with real and apparent power (W vs VA).

Unless you are thinking of a phase shift?

Umm – except exact words were ‘try and increase frequency’. Same as when you open the control valves on a steam turbine. But phase shift does cover it, It is known as mechanical phase shift. The position of the rotor c/w electrical phase.

Geez – wishing I hadn’t raised it now. :-). A distraction on what is an excellent piece of where standards fail to align for the benefit of consumers.

I had all sorts of problems with Voltage Rise after I had solar installed. In the end Power SA had to replace the line back to the Stobie pole at their expense. This reduced the voltage rise from 6 down to 3 volts when I did my own load testing, and I’ve not had a shutdown event since, but it’s difficult to achieve a full 5 kW yield in summer in the middle of the day once the 253 volt level is reached and the inverter starts backing off. If it’s really hot and people have air con running I can generate 5 kW, but that only happens 2 or 3 times in summer.

The line (and cooked fuse box) was only replaced after I told them my room light was flickering one afternoon, light in fridge also was doing the same thing. They fixed it later that evening. Obviously by this stage it had become a fire risk. I would not be surprised if what I was exporting was heating up and cooking the fuse box because of bad connection. Even so this is their problem to have infrastructure that is rated to handle the current in either direction.

Given my time over again I would have monitored and logged the voltage coming into the house so that I could make a better informed decision. This is something that should be done before solar is even installed. Voltage here last time I looked is always over 240 volts regardless of time of day. I sent Power SA logs and graphs of the issues I was having and gave them a link where they could view it in real time.

I think it would be better if we had small transformers on each pole feeding fewer houses to make it a more even playing field.

So the next house you look at buying, first see how close to the transformer you are, and if close, say to the real estate agent: sorry, not interested, it’s too close to the transformer.

I have Off peak water heater but Power SA refused to move it to the day time hours when I requested but now with higher export rate I think I’m better off the way it is.

Hi Stewart, in regards to your off peak heater. I have a time of use meter here in Sydney with off peak, shoulder, peak and control load 1. What you could do with your hws is what I did. I have two live wires going to my hws. One is off peak and the other is constantly live. I have these two wires connected to a 20A switch (which is mounted on the hot water heater). When there are going to be rainy days ahead, I leave the switch on off peak. When there are sunny days coming up (I always look at the forecast), I will switch off the hot water heater from the fuse box so it will not heat up during the night. During the day (sunny) I will switch on my hws to heat up from my solar system (flip the switch from off peak to constant). Yes, you could sell back to the grid for 12.5c per kWh and leave the hws on off peak permanently, but here in Sydney my off peak rate is charged at 17.5c per kWh, so I’m ahead by about 5c per kWh by heating up the hot water during the day from electricity produced by my solar system.

If you don’t mind the inconvenience of flipping a switch and keeping an eye out on the weather, then this will work for you. If you’re one of those set and forget people, then this will not be for you. Also consider downgrading to a smaller hws to match your family members. My original hws was a 400 litre big blue, but since the kids moved out (except for one, we now have 3 adults living at home) I changed my perfectly good working hws to a 125 litre stainless steel hws that I found on the cheap off eBay. Hasn’t missed a beat since the day I installed it 3 years ago, and we have never run out of hot water either. On a few occasions when the missus does a couple of loads of washing and washes the dogs (3), I will flick the switch to constantly on and heat up the hws so we don’t run out when we have showers at night. This system has been working great for me and saving good money. Hope this helps somewhat. Cheers, Tim.

p.s. do your calculations as I don’t know what they charge you for off peak and how much they give you to sell back to the grid and see if it’s viable.
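For anyone wanting to run that calculation, a minimal Python sketch follows. The 17.5c off peak rate and 12.5c feed-in rate are Tim's figures from above. The 8 kWh per day of water heating is purely an assumed example, so plug in your own numbers.

```python
def daily_saving_cents(heating_kwh, off_peak_c_per_kwh, feed_in_c_per_kwh):
    """Saving from heating water with your own solar instead of off peak power.

    Each kWh used for hot water during the day avoids buying one off peak kWh,
    but also gives up one kWh of feed-in credit.
    """
    return heating_kwh * (off_peak_c_per_kwh - feed_in_c_per_kwh)


# 8 kWh/day is an assumed hot water load, not a figure from the thread.
saving = daily_saving_cents(8, 17.5, 12.5)
print(f"Saving: {saving:.1f} cents per day")
```

If your feed-in rate is higher than your off peak rate the result goes negative, which means you are better off leaving the heater on off peak and exporting the solar.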

Hi Tim.

I think you are talking about the old style day/ night switch set up and that is not allowed in NSW if you are on a controlled load tariff and has not been allowed for years.

Cheers.

As a comment, in NSW it seems that if your overhead cables are 16 sq mm, they will upgrade the feed. You may need to request tho…

I noticed last summer that my new Solaredge inverter was regularly shutting down (maybe several times a day for about 30 minutes a time) despite cloudless skies (yes even in inner Melbourne). I was advised by my installer that this was probably because of voltage rise. How does the grid “decide” which inverters to disconnect? Are newer, “smarter” inverters more likely to be shut down than older ones, thus bearing a higher burden?

John,

you are caught because there are probably old inverters feeding into the same transformer. The newer inverters have tighter voltage control than the older inverters, so will disconnect first. I suggest you ask your installer to change the inverter to voltage feed control (available with Solar Edge inverters). What happens then is the inverter will roll back to control the voltage. This means you do not disconnect, but instead the inverter output reduces. This sounds like it would greatly reduce your export, but the reality is it makes very little difference, & because you are feeding continually when the power is available, actually can increase the output.

Also check the voltage: if it is high continually, ask the power supplier (Poles & Wires, not retailer), if the transformer voltage can be set lower. (The Grid export voltage is readable on the inverter menu).

What Doug calls “voltage feed control”, I suggest you call “volt-watt mode” as this is what it is called in AS4777.2:2015, so it is less likely to cause confusion, because there is always some sort of voltage limiting. See my post above in reply to Doug’s previous post if you are interested in more technical detail.

Though it is worth noting, under AS4777.2:2015 if a manufacturer implements volt-watt mode (implementation of this functionality is optional under the standard), then it must be on by default. So assuming an AS4777.2:2015 Solar Edge inverter that supports volt-watt mode (which it very likely does if it was bought post Oct 2016, and the fact that you have a problem suggests it is more likely a post 2016 inverter, as the pre 2015 standard had much more flexible voltage limits), it is probably already on. HOWEVER, it is probably more about doing everything you can do to set ALL the voltage values as high as they can be set, irrespective of whether that is with volt-watt mode or not. There are ranges of values that can be set, and there is often the ability to set higher than the “defaults”. But you are limited both by what is allowed under the standard and by the limits that your distributor sets.

It should also be mentioned that “volt-watt mode” does not prevent the inverter shutting down. It just raises the bar by about 5v. But it still must shut down when that voltage threshold has been reached. Mind you, if you have set the highest voltages you are allowed under volt-watt mode, and your voltage rise to the point of interconnect in the grid is within spec, and you are still getting regular shutdowns due to voltage rise, you should have a lot of evidence to put a LOT of pressure on your distributor to fix the issue, as clearly their grid voltage is VERY out of spec and with the right technical knowledge it will be very hard for them to argue otherwise.

As for the length of the shutdown. There are lots of different voltage limits under AS4777.2:2015. Volt-watt mode might allow you to keep generating 20% of power up to 265v, but once it trips over this and has to shut down, the inverter then needs to follow a different set of rules before it can restart. I would need to read the standard again, but I believe that means that the inverter can’t start again till the voltage is below 253v (and it might even be configured below that, as that is the maximum)!!! So if your voltage does not go below that, it can never restart, even though it might be at voltages at which it would be able to run if it had already started. So it only takes a small voltage rise of 2 seconds or less to switch it off, and then it might take a long time to come back even if the voltage drops substantially from the 265v.

As Doug says, it is not the grid that decides what to disconnect. It is purely your solar inverter that decides to disconnect in complete isolation to everything else (as every other inverter on the grid does the same). And the only things used to make that decision are the voltage that it “sees”, and the configuration parameters configured in the inverter. Lots of things play a part in that including :-
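The effect of having a trip voltage that is higher than the reconnect voltage can be sketched as a tiny state machine. This is a simplification based on my reading above (trip above 265 V, reconnect only below 253 V). It ignores the timing rules in the standard, and the voltage readings are invented for illustration.

```python
def simulate(voltages, trip_v=265.0, reconnect_v=253.0):
    """Return True/False (connected or not) for each voltage reading in turn."""
    connected = True
    states = []
    for v in voltages:
        if connected and v > trip_v:
            connected = False  # over the trip limit: disconnect
        elif not connected and v < reconnect_v:
            connected = True   # only allowed back once below the reconnect limit
        states.append(connected)
    return states


# Invented readings: one brief spike to 266 V, then the grid sits in the high 250s.
readings = [250, 266, 260, 255, 252, 258]
for v, state in zip(readings, simulate(readings)):
    print(v, "connected" if state else "OFF")
```

The inverter stays off at 260 V and 255 V, even though it would happily have kept running at those voltages if the spike had never happened, and it only comes back once the reading dips to 252 V.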

– grid voltage at your point of interconnect (hassle your distributor to deliver you power that is in spec)

– voltage rise (or drop if you are net importing) of all the wire between the point of interconnect and your solar inverter. Make sure all your connections are good, and make sure all your wires are big enough for the size of the solar to keep the voltage rise to less than 2%, and the bigger the wires the better, because it all adds up.

– what values are actually configured in your inverter (speak to your installer and make sure they have set everything as high as they can set them).

Suggest working on all of the things above to fix your problem.
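To get a feel for the wiring part of that list, here is a rough Python sketch of the voltage rise along a cable run. It assumes plain copper with a resistivity of about 0.0175 ohm·mm²/m, a single phase run (so the current travels out and back), and example cable sizes I have made up. It is not a substitute for the calculation your installer should do.

```python
COPPER_RESISTIVITY = 0.0175  # ohm * mm^2 / m, approximate value for copper


def voltage_rise(power_w, voltage_v, length_m, area_mm2):
    """Voltage rise (V) along a single phase cable run when exporting power_w."""
    current = power_w / voltage_v
    # Factor of 2: the current flows along the active and back along the neutral.
    resistance = COPPER_RESISTIVITY * 2 * length_m / area_mm2
    return current * resistance


def rise_percent(power_w, voltage_v, length_m, area_mm2):
    """Voltage rise as a percentage of the supply voltage."""
    return 100 * voltage_rise(power_w, voltage_v, length_m, area_mm2) / voltage_v


# Assumed example: 5 kW export at 230 V over a 30 m run, two cable sizes.
for area in (4, 6):
    rise = voltage_rise(5000, 230, 30, area)
    pct = rise_percent(5000, 230, 30, area)
    print(f"{area} mm2: {rise:.1f} V rise ({pct:.2f}%)")
```

With these assumed figures the 6 mm² cable stays under 2% while the 4 mm² cable does not, which is the "bigger the wires the better" point in numbers.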

Hi.

Valuable and revealing article.

Suggest look at nulux and ecojoule in the illawarra for the next piece on available solutions for grid overloads and imbalances.

Good luck

R

Our grid voltage for Australia has been reduced from 240 V to 230 V, but someone must have forgotten to tell our network operators, as almost all old and new pole and pad mount distribution transformers are set with a secondary output voltage of 250 V from whichever high voltage they are built for: 11 kV, 22 kV or 32 kV. This was fine for the old standard voltage of 240 V. Most network operators allow a 10 V drop under full load from the transformer’s low voltage terminals to the furthest point of common coupling on that transformer’s LV distribution line, which would leave a voltage of 240 V. With today’s standard of 230 V, the transformer outputs should be set to 240 V; allowing a 10 V drop would give an output of 230 V and prevent voltage rise issues during the day when solar is feeding back. This would require every distribution transformer’s taps to be changed individually. An easier way would be to reduce the high voltage to all these distribution transformers via the transformer in the zone substation by 2.5%, and almost all voltage rise issues with solar would disappear. But getting network operators to change their ways is like beating your head against a brick wall.
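The arithmetic in that comment can be checked with a short Python sketch. It assumes the LV output of a fixed-ratio transformer scales in direct proportion to the HV feeding it, and it uses the 250 V setting and 10 V full load drop quoted above. The function names are hypothetical.

```python
def end_of_line_voltage(transformer_v, full_load_drop_v):
    """Voltage at the far end of the LV line under full load."""
    return transformer_v - full_load_drop_v


def after_hv_reduction(transformer_v, reduction_percent):
    """LV output after lowering the HV feed, assuming a fixed turns ratio."""
    return transformer_v * (1 - reduction_percent / 100)


old_setting = 250.0
reduced = after_hv_reduction(old_setting, 2.5)
print("Today, end of line under full load:", end_of_line_voltage(old_setting, 10))
print("After a 2.5% HV reduction, at the transformer:", reduced)
print("After a 2.5% HV reduction, end of line:", end_of_line_voltage(reduced, 10))
```

A 2.5% reduction takes the transformer output from 250 V to just under 244 V, which still leaves the end of the line above 230 V under full load while giving solar about 6 V more headroom before the 253 V limit.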

Lowering the voltage in the HV feeds also means the current in the cables would increase.

High voltage feeds are used to make it easier to deal with losses in the cables and also allow thinner cables to be used. These would have been designed for a maximum current level.

Our inverter was tripping out and the line voltage was peaking at 276v AC RMS!!! We logged a fault and they retapped our transformer on the street, and we are back around 250v. I wonder how long we were up over 270v as I only noticed the inverter was tripping out by pure accident.

As I understand it, to go over 258v output from your solar PV is absolutely illegal (for safety reasons), so if this is happening, the settings on your inverter must be wrong. You should get it checked ASAP. As mentioned elsewhere, the inverter must push power out a couple of volts higher than the grid to allow export.

Even 250v is at the high end of the allowable range, and should not default around there.

The main issue here is that too many volts on the local house wiring will place stress on appliances. In such circumstances the installer needs to do the proper load testing procedure to determine what is being lost.

There will be some resistance in the cable running back to the pole the house is connected to. This will drop volts across it and will result in wasted power but is normal (no such thing as a perfect conductor) as long as it’s in good condition and within specifications.

The Grid will act as a voltage CLAMP so will limit the voltage depending on what is being used on the grid at any point in time. If not much power is being consumed on the line by others then your potential exported power won’t be fully realised, as there is insufficient load to clamp it, and as a result you end up with more voltage rise at the inverter output.

I wish that statement was true, because if “safety” of voltages above 258 was an issue, it would be much easier to get traction with the distributors to do what they should be doing, which is to tap the voltage down to the levels that they are required to provide. But it is not a safety issue, as the network needs to be specified to safely handle voltage well above this, and you will see spikes in the network well above this from time to time as a matter of course.

As further evidence of this, there will be a crap load of solar inverters out there configured with voltage cutoff parameters set well above 258. You would need to comb the fine print of various AS4777 documents about what was allowed, but caring much about voltages (at the 253-258 level) is a recent thing. I think you will find that pre AS4777.2015 inverters (the 2015 standard was only mandated for sale from the end of 2016) will be routinely set with voltage cutoffs at much higher values. For example my pre AS4777.2015 inverter is set to 270v.

Even AS4777.2015 inverters are allowed to output 265v under particular circumstances (volt-watt mode).

The voltage issue in the grid is largely with the distributors not tapping down to the required standard, which was changed a while ago from 240v to 230v (assuming proper install and ensuring your voltage rise is in spec). But even before that they tended to run on the higher end of the voltage range, I assume because for them, lower voltage was more likely to create bigger problems than higher voltages. As seen here, volts tend to be well above that standard. This is compounded by the fact that the distributors are loath to do anything about it. So when they deliver something out of spec, and we have new inverters that assume distributors are delivering to spec, then we have the issues.

Now we need to be careful about coming up with all sorts of conspiracy theories about why the distributors don’t fix it. Eg I think the theory that it is to sell us more power is pretty baseless, and for most things the voltage will not make much difference to the amount of power we buy. As others have said here, most inductive loads will only use as much power (which is what you pay for, and not volts, amps or even VA) as they need irrespective of voltage. Your hot water heater and other resistive loads might draw more power with higher voltages, but that usually only means your hot water heats up quicker, and shuts off sooner for the same overall energy use. There are only a few things that might draw more for little benefit, but even they are getting fewer. Eg if you have incandescent light bulbs, they will draw more power and burn brighter. If you can then turn lights off because others burn more brightly, it would be a zero sum game, but I guess in other cases this is not practical to do, and you will use more power and live in a brighter house and probably have bulbs burn out a little sooner, and other electronic components not last quite as long as they otherwise might, especially if they were designed for lower voltage (but this is probably not a massive big deal, as a lot of things are now designed for a wide range of world voltages).

Maybe you could argue that the whole industry is tuned to discouraging solar. But I suspect there is no conspiracy here, but just no particular gain from the distributors to fix, so low on their priority list.

Arguments that they can’t turn the voltage down, because this will increase current and potentially overload the network, I think also miss the main points. 1) We are only talking about turning it down to be within the national spec, so we are not talking about turning it down to a point where this should be an issue. And 2) turning down voltages does not necessarily lead to increased currents. For example, turning down voltages will reduce the current draw of resistive loads like your hot water heater. Sure, devices that use SMPS etc are likely to draw more current to ultimately draw the same power, but there are swings and roundabouts, and if they were still delivering to spec, it would not be an issue. And if it was, it is definitely an upgrade that should be happening anyway.

The reality, is there are good reasons for the voltage limits in solar inverters that are generating power, and it has nothing to do with safety. And the more solar there is there, the more important these limits are. For example, when there is more solar than demand on the grid, if voltage rise is not checked by throttling and shutting down inverters, there will be more problems. So the rules are there for perfectly valid and good reasons. But what is missing is that we have a grid that is not delivering to spec, and when this happens we are being curtailed when there is no good reason to do that, other than the default grid output is too high, and nothing to do with oversupply of electricity. Effectively we are playing by the “rules”, but the distributors in many cases are not.

But the real reason it is probably difficult to get traction is that it is probably a difficult and expensive problem for them to fix, which is a problem for all of us. I think you will find there are significant parts of the grid that are not built to a sensible spec for today’s expanded requirements. When cable size and runs between transformers were specified and installed, we probably all used less power. This means less current and less voltage drop between start of line and end of line. As we have all squeezed more people in, added more pools and pumps, added more AC in more rooms, got bigger TVs and of course added more and bigger solar systems etc, we have probably pushed the limits of the system, with more people more out of spec for more of the time. This is all compounded by lack of flexibility to tap down transformers locally which were designed for higher voltages, problems at the end of lines if we reduce at the substation etc etc. The solution is to come back and fix everywhere that is potentially a problem. So it is a whole of system fix that might include reducing voltages at the distributor, tapping down the transformers, replacing transformers, increasing the number of transformers to reduce the cable runs, and increasing the size of the wire. All this is not trivial or cheap. And so if the only people hurting are not you or anyone you overly care about, the motivation to fix it is probably low for the distributors. This is especially true if the people that are hurting are also complaining about high power prices, and you know the fixes are going to cost money and push up prices.

So what is the fix? I reckon the fix is to not ask people with solar to bear the burden (though I am sure Angus Taylor, Craig Kelly and others in power would have very different ideas). If the system is out of spec, why should we be curtailed? If the distributors don’t care enough about grid voltage to tap it down in our street and the high voltage is NOT due to oversupply of the grid, why not let us adjust our solar voltage cutoffs to be representative of normal grid voltage? If the nominal grid voltages are too high in our area, why not let us adjust up? Now this does raise a can of worms that is hard for the distributors to manage, so that is why you probably have not officially seen this yet. But it is probably also why, as far as I know, distributors are not driving around and policing the maybe slightly dodgy fixes your solar installer has probably done for you in a lot of cases to avoid these real world issues.

Summary is, I suspect the problem is a bit bigger than anyone wants to acknowledge and fix, and easier just to ignore. And hopefully the distributors are fixing things slowly as they go, as and when they can????

Well Matthew, we had high voltages on our incoming mains – often close to the regulated limit – and I had noticed we were often “blowing” CFL & LED rated at 8,000 hours, within 500 hours.

I was worried about our TV & Amplifier etc., so fitted an auto-transformer to drop the supply by about 12Vac. Monitored the power (at the GPO, with a true-power meter), and saw a 30W decrease. So the heat dissipation in the TV switchmode supply was reduced.

Point is, higher voltages:

1. Increase power costs, and

2. Reduce equipment life.

So – I don’t agree with some of your premises – with hard evidence to back this up.

Ian,

Re your comment “that higher voltages increase power costs, and reduce equipment life”, I think that you have misunderstood my post. All I was trying to say, is that it is complicated if you understand the electrical engineering behind it, and it can’t be summarised into simple statements like the one you make without there being many exceptions.

Lots of people assume that high voltages are the enemy, because our voltages in the vast majority of instances are significantly higher than the spec and thus the sweet spot for what things are designed for. But many do not appreciate that low voltages, significantly below the spec, are equally potentially damaging for a different set of reasons and equally can increase power consumption. The grid is the classic case, and often the damage and outages in the grid are actually caused in times of peak demand when voltages sag, and this voltage sag causes currents to rise as inductive loads (think AC on hot days) need to draw more current to offset the voltage drops, in a cascading spiral down until circuits blow and transformers melt down due to overloading the circuits with current etc.

So the real issue is not high voltages, or low voltages, it is voltages falling outside of the specifications that everything is designed to run at. Now in Australia our problem for the vast majority of the time is the high voltages, and there is no doubt we are paying for that in one way or the other. And it really should be fixed. But I doubt there is any great conspiracy here to make us use more power or anything else. It is just a combination of people being lazy about fixing what is a complicated issue, and the fact that it will be expensive to fix, and there are already lots of people complaining about the cost of power, so there is probably very little political will to do something which can only raise power prices. Better to leave it as a relatively “hidden” cost that people are not aware of. Also a drop in voltage probably leads to less headroom on the hot day in summer where there is more demand than supply and we get things breaking because of low voltage. So it is all a compromise that is expensive to fix without making other compromises. But thankfully, it looks like there is an increasing awareness of the issue. eg https://www.abc.net.au/news/2020-08-17/solar-powerlines-already-over-voltage-limits-unsw-study-finds/12534332.

ABSOLUTELY, higher voltages than the spec that appliances are designed for will absolutely reduce the life of many, if not most, appliances. This is definitely true for resistive loads and, for different reasons, for many non resistive loads as well. The classic case is the incandescent light bulb, which will burn brighter for higher voltages, but also burn out sooner, and in some cases massively sooner. Ohm’s law I = V ÷ R tells the story: a higher voltage across a fixed resistive load leads to higher current, which in turn will burn the light bulb out sooner.

Also as you state, in a lot of cases high voltage will increase power, or at least appear to increase power usage. Bottom line is for resistive loads, increasing voltage will increase the current through a fixed resistance and this will increase the power used (ie I = V / R and P = VI tell the story). And at any instant, this will be totally obvious when you measure it. I can see from a power monitor my HWS appearing to use more power when the voltage is higher, and less when it is lower, as is totally predicted by the formulas above. HOWEVER, this extra power is actually being used to heat the water in the HWS faster, so in reality I am not using more energy in kWh, because all that happens is I use more instantaneous power, but the water heats quicker and shuts down sooner, so the amount of energy in kWh is probably very comparable. But in the case of an incandescent light bulb, you will use more energy with higher voltages, because it will use more power at any instant, and you will likely run it just as long, so yes, it will use more kWh.

But when it comes to typical inductive loads, the exact opposite happens. These loads typically only need a certain amount of power. So with P = VI, if we increase V, the I (current) decreases to give fixed P (power). So in simplistic terms, increasing voltage just results in a reduction in current, and you use the same power in theory. And in truth, if you then include efficiency losses in the wiring for these devices, higher voltages will actually reduce the power draw. This is because less current in the wires feeding these fixed power devices will result in significantly lower efficiency losses. This is exactly as predicted in the power formula P = I^2 x R. Notice that I^2 is I squared. So from this we can see the power losses go up with the square of the current, which is what would happen if you lower the voltage. The classic example as proof of this is our power distribution grid. There is a reason the networks go to lots of expense to distribute the power with a very high voltage distribution network (eg 330kV). The higher the voltage they can run, the lower the current they will need, and that will reduce the efficiency losses as predicted by P = I^2 x R.
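The hot water example can be put into a few lines of Python. This is a sketch under assumptions: a heating element rated 3600 W at 240 V (so 16 ohms, treated as constant), and 10 kWh needed to bring the tank up to temperature. Neither figure comes from my own system.

```python
def resistive_power(voltage_v, resistance_ohm):
    """Power drawn by a fixed resistance: P = V * I = V^2 / R."""
    return voltage_v ** 2 / resistance_ohm


def heating_hours(energy_kwh, voltage_v, resistance_ohm):
    """Hours for the element to deliver a fixed amount of heat energy."""
    return energy_kwh * 1000 / resistive_power(voltage_v, resistance_ohm)


ELEMENT_OHMS = 16.0     # assumed: 3600 W element at 240 V
ENERGY_NEEDED_KWH = 10  # assumed heat needed before the thermostat cuts out

for volts in (230, 250):
    power = resistive_power(volts, ELEMENT_OHMS)
    hours = heating_hours(ENERGY_NEEDED_KWH, volts, ELEMENT_OHMS)
    print(f"{volts} V: {power:.0f} W for {hours:.2f} h = {power * hours / 1000:.1f} kWh")
```

The power meter reads about 18% higher at 250 V than at 230 V, but the element runs for correspondingly less time, so the kWh on the bill come out the same. A light bulb has no thermostat, so for the same hours of use the higher power goes straight through to the bill.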
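Here is the same idea as a small Python sketch for a load that draws a fixed power regardless of voltage. The 2300 W load and 0.5 ohm of wiring resistance are assumed round numbers, and the 115 V case is only there to make the scaling obvious.

```python
def wiring_loss(load_power_w, voltage_v, wiring_resistance_ohm):
    """Loss in the wiring feeding a constant power load: (P / V)^2 * R."""
    current = load_power_w / voltage_v
    return current ** 2 * wiring_resistance_ohm


# Assumed example: a 2300 W constant power load fed through 0.5 ohm of wiring.
for volts in (115, 230, 250):
    print(f"{volts} V: {wiring_loss(2300, volts, 0.5):.1f} W lost in the wiring")
```

Halving the voltage doubles the current and quadruples the loss, which is the I squared term at work, and the same scaling is why transmission lines run at hundreds of kV.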

Though there are swings and roundabouts, and it would not surprise me to see inefficient inductive loads that lose more to these inefficiencies at higher voltages; maybe your TV is just such an example. Or even loads you assume are inductive, but which have a high enough resistive component that the higher voltages result in higher currents in the resistive parts of the circuit, and more wasted heat and losses.

So while there are no doubt examples of higher voltages increasing power costs and reducing equipment life, there are also many examples where higher voltages can reduce the power used (eg high voltage transmission infrastructure, and even the resistive losses in the wiring feeding your inductive loads), as well as examples where they reduce equipment lifetimes (eg burning out circuits due to the high current drawn by resistive loads).

Anyone who lives in the US would have a better understanding of the many issues of low voltage as they wrestle with their 110v supply. So it is definitely not a case of “high voltage bad, low voltage good”. The important thing is that it is in spec, so everything can be optimised for that spec. Anything outside of the spec will more than likely be bad, be it on the low side or the high side.

On your light globes: if you are consistently only getting 500 hours from 8000h rated globes, I strongly suspect there is a lot more to that story than simple voltages up around 253v. I suspect the quality of the globes is not up to scratch, or the quality of your power is a lot worse than you realise. To put it into context, I have voltages that are consistently 245v to 258v at the place I have been living for 6 years. In fact, for half that time the voltages were consistently 4 volts higher than that. I have about 150 LED light globes of various types and, in that time, I have had literally 2 blow. Both of these were very cheap GU10 ones in an outdoor light exposed to the weather, and I make excuses for those ones. Most of them are dedicated LED downlights I replaced halogens with years ago. Many of these would have done over 8000 hours (many less, but I suspect most would have already done well over 500 hours). I do have a smattering of old CFLs that occasionally blow, and my gut tells me they are a little less reliable than the LEDs, but WAY more reliable than incandescents, even with the high voltages. But I only still have a couple of CFLs because I already had them, and I suspect there would not be many that have done less than 1000 hours, and probably MUCH more.

As for your 30w decrease for your TV when reducing the voltage by 12V, you may well be right. However, 2 comments. That suggests either 1) you have a pretty crappy and lossy power supply with lots of extra losses related to the higher voltage, or the brightness of the TV is not well regulated against fluctuations in voltage, so lower voltage leads to lower brightness and less power, or 2) you have failed to account for the fact that it is almost impossible to take a reliable baseline power measurement for a TV unless you have a consistent test pattern, because how much power a TV needs is VERY dependent on what is on the screen, and very small changes in brightness can make a big difference to power draw. Even warm-up can make a difference to power draw. So unless you have accounted for that (maybe pause on a single picture and take readings after everything is warm), the estimated readings can be misleading. Also note that lots of these power meters are not very accurate and fail to deal with power factor and less-than-perfect sinusoidal waves.

Anyway, I am interested in your auto-transformer. What model is it, and are you happy with it? I would be interested in your readings on your auto-transformer. In my experience these things create as many problems as they fix. In particular, have you measured the efficiency losses that it almost certainly introduces? I suspect in many cases they will be more than you might gain from the voltage drop, unless you have particular applications.

If you have the auto-transformer and an accurate meter, I reckon more experimenting with more loads would be educational. Ie use it to boil your kettle with a fixed amount of water (a simple, totally resistive load). There is no doubt you will see higher current and power draw with higher voltages. But if you can accurately measure kWh, or time how long it takes to boil a fixed volume of water, it will be quicker with higher voltage and the kWh will be about the same. I think you will also be able to find inductive loads in your house which draw similar power as you raise the voltage (eg play with a fan, which is more of an inductive load).

But again, what is important is for the grid to supply us with a voltage that is close to spec, so that appliances can be built and optimised for this voltage. If the DNSPs do that well, there will be no issues.

Hi Matthew

Had to respond here – no more indents!

I feel you have failed to consider an important issue – saturation in transformer cores.

Also, input capacitor ratings in switchmode power supplies.

A lot of household equipment (computer monitors etc.) is placarded as 230Vac, and would not have huge margins before the core approaches saturation (a non-linear characteristic), as much as anything to minimise material and weight costs.

I do of course understand how resistive loads operate. Interestingly enough, reduced voltage allows for more self-use of solar power, albeit at the cost of a longer time to boil a kettle.

Not sure your comments about inductive loads are necessarily accurate. After all, the formula for the current in a practical inductor (one with a series equivalent resistance, so the current does not lag by a full 90 deg, which would mean zero real power draw) shows that current increases with voltage. So, inductive loads change the power factor, which will increase I²R losses in the wiring unless correction is applied.
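
A minimal sketch of that point, modelling the load as a fixed resistance in series with a fixed inductance on a 50 Hz supply. The component values are hypothetical, and the model deliberately ignores core saturation and the behaviour of real motors, which are not simple fixed impedances.

```python
import math


def series_rl(voltage_v, resistance_ohm, inductance_h, freq_hz=50.0):
    """Return (current in A, power factor, real power in W) for a series R-L load."""
    reactance = 2 * math.pi * freq_hz * inductance_h
    impedance = math.hypot(resistance_ohm, reactance)
    current = voltage_v / impedance
    power_factor = resistance_ohm / impedance  # cosine of the lag angle
    real_power = current ** 2 * resistance_ohm  # only the resistance dissipates power
    return current, power_factor, real_power


for v in (230, 253):
    i, pf, p = series_rl(v, 20.0, 0.1)  # hypothetical 20 ohm, 0.1 H load
    print(f"{v} V: {i:.2f} A, power factor {pf:.2f}, {p:.0f} W")
```

In this simplified model the current rises in proportion to the voltage and the real power with its square, while the power factor stays the same. A load with no resistance at all would lag by the full 90 deg and draw no real power.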

Hi Ian,

I suspect we might be mostly in agreement, ie voltages at the high end of the spec or above it are, on the whole, not going to be good for appliance longevity, and in some cases will increase power usage.

But I was just trying to bring some balance to it, as I suspect a lot of people don’t understand the detail, overestimate the extra power drawn at high voltages, and think it is some conspiracy by the DNSPs to make you draw more power. Certainly some appliances will draw more power, as you have observed, but many will not use significantly more. And some of this extra power draw will be offset by the fact that devices with a regulated power draw (ie many AC units, for example) might actually draw marginally less power. With these appliances, high voltage means lower current draw, which means lower losses in the wires, and IF these gains are greater than the extra losses in the appliance due to the higher voltage, there could be a net gain.

What I put above was meant to be kept as simple as possible and in layman’s terms. It was not meant to cover every corner of a complicated area with many factors on both sides of the equation. Lots of what I said is oversimplification, and if put under the microscope there would be lots of exceptions (ie I should not have used the term inductive loads, and probably should have said regulated loads). But this is not the forum for all the detail, so I have attempted to summarise and highlight a few exceptions for your consideration. It was just meant to highlight a few “swings and roundabouts” on a topic which is often considered one-sided.

After all this is accounted for, do I think your typical household will use more or less kWh with higher voltages? Yes, my guess is the typical household will use marginally more once all the swings and roundabouts are factored in. This is because there are loads that will absolutely use more power, ie an incandescent light will absolutely use more power, as predicted by P = V^2 ÷ R, which is a quadratic rise in power with voltage (assuming you don’t value the extra light it will also output, or turn more lights off to balance it out). Other loads that ramp up their output with voltage will also result in power that is ultimately wasted. There will be some loads that use less power, but these are probably rarer, and the savings likely much smaller where they do occur. Then there are the bigger loads that probably won’t use much extra energy at all (inverter AC units, hot water heaters, kettles etc). But certainly, once you consider all of this, it is very likely that most households will draw more power with higher voltages, though my guess is it is nowhere near as much as many people think.

FYI, I did a quick test of my TV setup (large, modern LCD TV showing a static test pattern, high-end amp, Foxtel etc) and got 378w usage at 250v and 374w at 230v. So yes, higher voltage did lead to a 4w rise, or about 1% more power. With everything in standby mode, both readings were 45w (ie no difference). But in truth even the 4w difference when turned on is well within the margin of error for the test I did, though I suspect it is reasonably accurate and a reasonable representation of what others would see with similar, relatively modern equipment. But maybe you have some very inefficient appliances (cheaper, or maybe designed without efficiency in mind…eg very expensive audiophile equipment where low noise is the main design criterion and efficiency does not get a look in).
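
For comparison, here is a quick sketch that sets the measured change in those TV readings against what a plain fixed resistance would do over the same voltage step, using P = V^2 ÷ R. The only inputs are the four figures quoted above; the function name is just an illustrative helper.

```python
def percent_change(before, after):
    """Percentage change going from `before` to `after`."""
    return (after - before) / before * 100


# Readings quoted above: 374 W at 230 V, 378 W at 250 V.
measured = percent_change(374, 378)

# A fixed resistance draws power in proportion to V squared.
fixed_resistance = percent_change(230 ** 2, 250 ** 2)

print(f"measured: {measured:.1f}%  fixed resistance: {fixed_resistance:.1f}%")
```

That works out to about 1.1% measured against roughly 18% for a purely resistive load, which suggests the power supplies in this gear are regulating well.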

While I don’t doubt your observations on your TV scenario and the longevity of your light globes, I suspect they will not necessarily be widely replicated, ie most people have voltages at the higher end of the range, similar to you, and I suspect most people typically get well over 500 hours out of LEDs and CFLs. There are some pretty crap power supplies in a lot of devices (though better than they were 10 years ago), so maybe your TV observations are more widespread than I would guess, but I suspect your observations are very much an outlier.

I assume you are using your auto-transformer for appliance longevity and not to save power, because my guess is it will likely be losing more power in efficiency losses than the 30w you might be saving by running the TV at a lower voltage after the transformer? But I would be interested in your experiences, because I am always interested in the holy grail of a 100% efficient voltage regulator.

An excellent and timely article.

We had our 6.5kW system with a 5.5kW SMA inverter installed early last year. I am not an electrically qualified person, so it took me a while to get my head around all this stuff. Anyway, for several months we were plagued with overvoltage tripping the system out numerous times per day. It took some to-ing and fro-ing with both SAPN, who turned the tap down a bit, and our installer, who upped the settings on the inverter, and by the beginning of this year we were finally getting an ideal yield curve on fine days, flattening out at the mandatory 5kW output limit. I do understand that inverter voltages need to be slightly higher than the grid voltage at the supply point.

So our immediate issues are resolved, but I have continued to log the AC voltages at the inverter several times every day, and during the day before and after noon the voltages almost routinely go over 250 volts, and quite often over the standard max of 253v, and from time to time over 255v!

Rarely if ever do voltages drop below 240v except at night.

A couple of points emerge.

It is some years now since the Australian standard voltage was reduced from 240v to 230v, with a tolerance of between 216v and 253v. It is obvious that SAPN (and for all I know other suppliers too) have done nothing to reduce voltages to that new lower band. During the day they are nudging up around, and exceeding, the high end most of the time.

Secondly, an engineer friend of mine tells me that, except with thermostatically controlled appliances, supply at higher voltages means the consumer is buying more electricity than he/she needs to. This might be only small beer for one domestic consumer, but if you add in all consumers including industrial consumers, it means that the utility companies are selling WAAAY more electricity and hence generating WAAAY more revenue than would be the case if voltages were kept down around standards. No wonder they would be tardy about reducing grid voltages. If this is actually so, then it is a scandal.

Would be interested to hear views on this issue.

Finn Peacock, I’m going to email you about this issue too.. Cheers

In theory any element type heater (non-inductor type load) will draw more current if run above the appliance’s rated voltage. If it’s a room heater then it probably won’t really matter, as the room will warm up quicker and the thermostat (if it has one) will switch in so the heater is on for less time, but stress on the components is an issue.

If using inductor type loads such as Switch Mode Power Supplies (SMPS) then it is more complicated, but modern TVs and appliances use SMPS these days, and they can operate over a wide range of input voltages by adjusting the Pulse Width Modulation (PWM) to generate the required power. Modern plug packs (wall warts) use SMPS technology. Modern air conditioners and fridges also use inverters, which I believe are based on this technology. I never use element heaters in winter and just use the inverter air conditioner instead.

If you have an element Hot Water Service then it also would just heat up a bit faster and cost the same in the end.

With modern lighting like LEDs, whether it makes a difference depends on how they are powered. If the LED light uses an SMPS circuit, that’s good, and it is probably the same with CFLs.